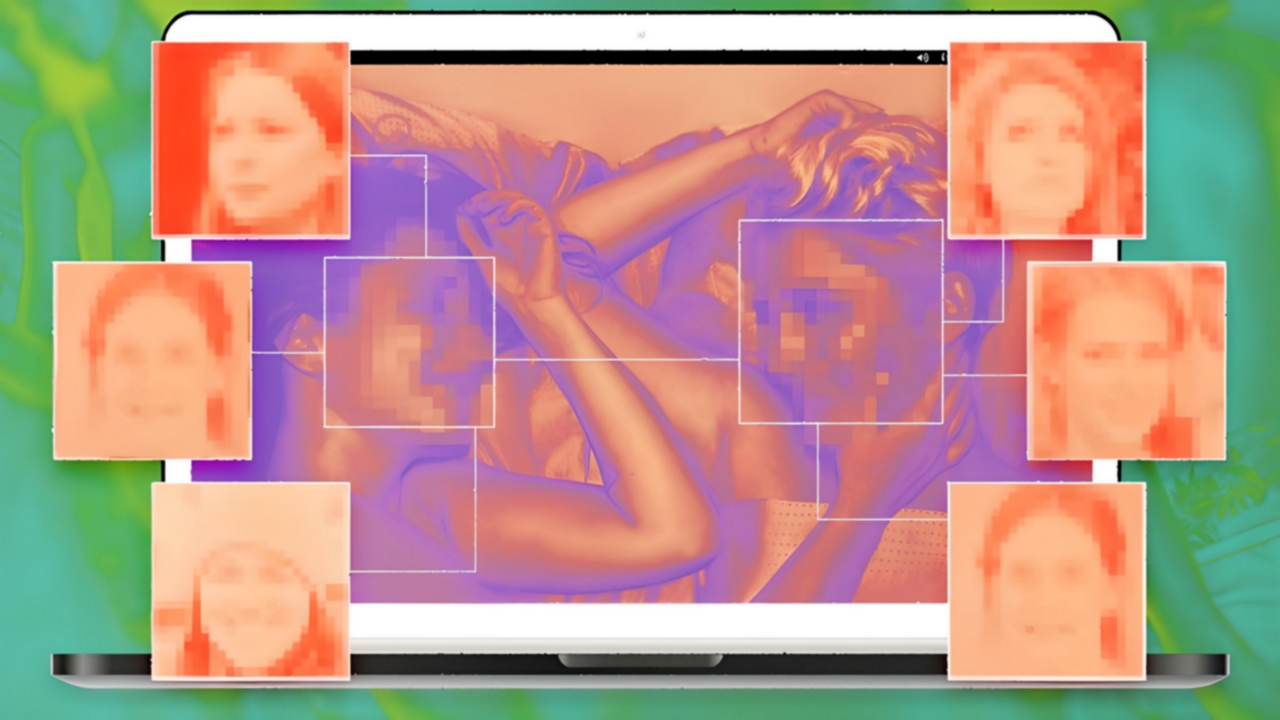

Tech giant Google has made its final decision on how AI-generated explicit content will be handled in search results. The company officially announced that non-consensual ‘deepfake’ content will not appear in its search results and will be blocked immediately upon detection.

What if Google Can’t Remove Deepfake Content?

Google stated that AI-generated deepfake content will be promptly removed from search results. For cases where some images cannot be completely removed for technical reasons, the company has devised a fallback solution.

Google has experimented with using AI-generated images in search results, but those images do not depict real people and do not contain explicit content. The company announced that it is working with experts and victims of non-consensual deepfakes to address the problem and strengthen its systems.

For some time, Google has allowed individuals to request the removal of explicit deepfake content. Upon receiving a legitimate request, Google’s algorithms search for and filter out explicit images resembling the individual, removing them where necessary.

With Google’s new measures, if these images cannot be fully removed from search results, their visibility will at least be reduced. This approach aims to better protect the personal rights of individuals.

What do you think about this? Do you believe the company is right in its decision to reduce the visibility of such images in search results? Feel free to share your thoughts with us in the comments.