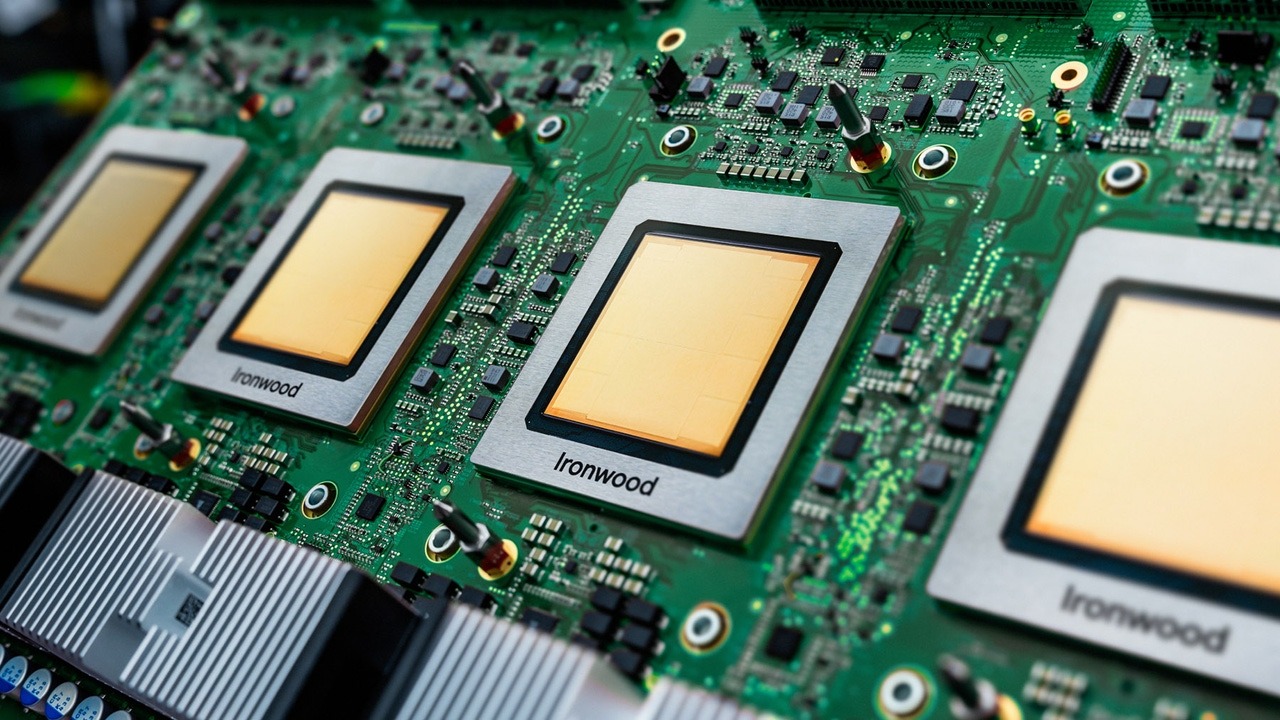

Google has officially introduced Ironwood, a new processor built to accelerate AI workloads. Ironwood is the latest generation of Google's Tensor Processing Units (TPUs), and it is designed to improve the speed and efficiency of AI applications such as Gemini. Google unveiled the chip at its Cloud Next conference, underscoring the company's growing focus on custom AI hardware.

Google says Ironwood boosts AI capabilities significantly

Google presents Ironwood as a major performance leap over its previous TPUs, claiming 2.3 times the compute power and 1.7 times the energy efficiency. Gains like these matter for scaling up large AI models and for reducing the energy use, and therefore the carbon footprint, of data-intensive workloads. With Ironwood, Google hopes to meet surging demand for AI tools with faster processing at lower cost.

Ironwood will power the latest Gemini AI models

One of the first major applications of Ironwood will be the Gemini AI system. Gemini is a multimodal model that handles text, images, and audio, and it requires substantial computing resources. Ironwood will run these models on Google Cloud's infrastructure, which Google says will give developers faster response times and more robust performance.

Google aims to lead in AI infrastructure innovation

By designing its own chips, Google is positioning itself to compete more aggressively with NVIDIA and AMD in the AI hardware market. Rather than relying solely on third-party hardware, Google gains greater control over how its AI systems are developed and deployed. The strategy could also lower hardware costs for customers using Google Cloud AI services.

Ironwood marks a major hardware milestone

The launch of Ironwood signals Google’s continued push into the core of AI infrastructure. As the tech race heats up, this custom chip may play a central role in powering next-generation applications. With faster, greener performance, Ironwood is built not just for today’s AI demands but for what’s coming next.