Tech giants have come together for a new collaboration that could shape the future of artificial intelligence (AI) systems. Companies including Meta, Nvidia, AMD, OpenAI, and Cisco have launched an initiative called “Ethernet for Scale-Up Networking” (ESUN) under the Open Compute Project (OCP). The project's primary goal is to develop open Ethernet standards for high-performance interconnects in AI clusters and to provide a robust alternative to the technologies currently used in this field.

Tech giants Meta, Nvidia, and OpenAI make a move toward Ethernet

InfiniBand has long dominated the networks built for fast data transfer between processors in AI systems. This technology, which holds approximately 80% of the market, has a closed and proprietary structure. The companies participating in the ESUN initiative believe that Ethernet’s more mature, cost-effective, and interoperable architecture offers a significant advantage. They also expect engineers’ familiarity with Ethernet to simplify the complex systems that manage massive AI workloads.

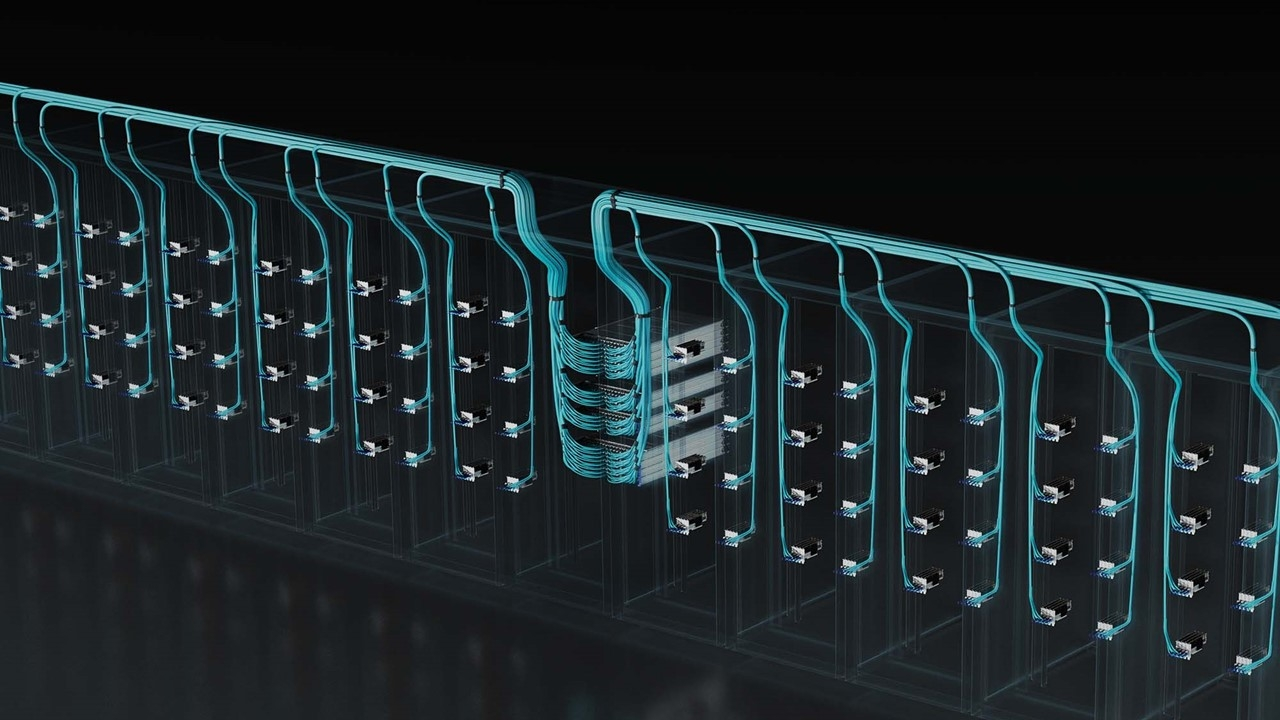

The ESUN project continues earlier work within the OCP that explored Ethernet-based data transfer for multi-processor systems. The companies involved will define common standards for critical issues such as switch behavior and the error-free, lossless transmission of data packets. The project will also examine how network design affects load balancing and memory ordering in GPU-based systems.

The initiative’s success depends on striking the right balance between openness and performance. Ethernet-based solutions already exist, such as Broadcom’s Tomahawk Ultra switch, which supports 77 billion packets per second, and Nvidia’s Spectrum-X platform. Even so, Ethernet must prove itself in the most demanding AI tasks before it can replace InfiniBand. In a field where low latency and high reliability are crucial, Ethernet’s performance will determine the project’s fate.

This large-scale collaboration is an ambitious step toward open, interoperable standards in AI infrastructure in place of closed systems. So, do you think this move by the tech giants could shift the balance in AI data centers? Share your thoughts in the comments.