Tech giant Microsoft has released a statement regarding the potential risks of the experimental AI features it is trying to integrate into the Windows 11 operating system. The company aims to transform Windows into what is now known as an “agent operating system” (Agent OS).

Windows 11 may begin to hallucinate

In this framework, agent AIs can autonomously perform various operations on behalf of users. However, Microsoft clearly stated in the statement that these features have functional limitations and can produce unexpected results in some cases.

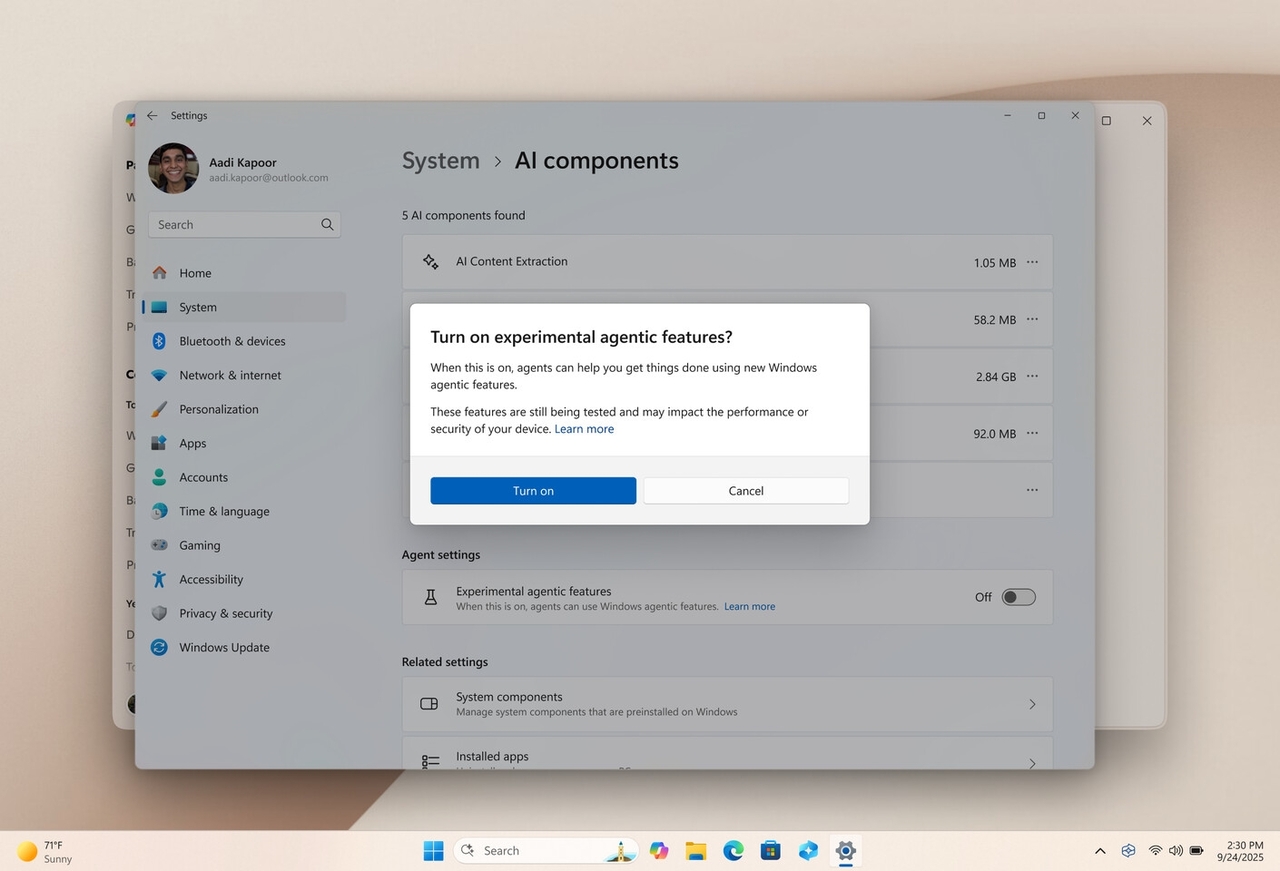

This situation brings with it new and significant risks, especially from a security perspective. Users who install Windows 11 build 26220.7262, which includes new AI components, will see an option under Settings > System > AI Components that highlights its experimental status.

This feature is disabled by default and must be manually enabled. When users enable the feature, the system displays a warning that these functions are experimental and could negatively impact the device.

Experts state that the emerging attack techniques against these autonomous agents pose the greatest security risk. Chief among these methods is “cross-prompt injection.” In this technique, malicious instructions are hidden within normal documents or interface elements.

The AI agent perceives these hidden instructions as a task and executes them. This state, which Microsoft calls a “hallucinatory state,” poses serious risks, such as AI agents installing malware, exfiltrating payment information, or performing other dangerous actions.

Microsoft states that it assigns a private, controllable workspace for AI agents to operate. Actions are recorded in this space and can be reviewed later. The company likens this system to Windows Sandbox, but because AI agents can continue to manipulate files between sessions, the potential attack surface increases significantly.