Google I/O 2025 put artificial intelligence at the center of nearly every announcement. In the livestreamed event, Google unveiled new AI features across a wide range of areas, from search and communication platforms to office tools and wearable technology.

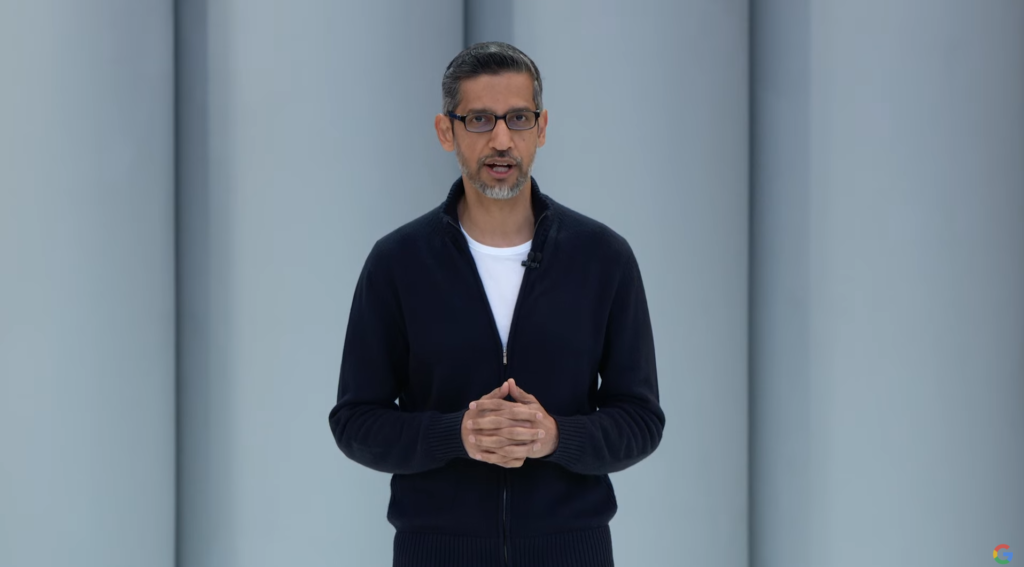

The event opened with a keynote speech from Google CEO Sundar Pichai, highlighting how AI has evolved from merely providing information to completing tasks on behalf of users, simplifying daily life, and automating workflows.

“More intelligence is now available to everyone, everywhere. The world is responding—and adopting AI faster than ever before,” said Pichai, emphasizing that decades of research are now being transformed into tangible, real-world applications across the globe.

Highlights from Google I/O 2025:

AI Mode

One of the most notable announcements was AI Mode, a feature that redefines traditional search. Starting today, it’s available to all users in the U.S. AI Mode turns Google Search into a comprehensive research assistant using a “query fan-out” technique, which breaks a user’s query into subtopics and runs multiple searches simultaneously.
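
The fan-out idea can be illustrated with a minimal Python sketch. This is not Google's implementation: `split_into_subtopics` and `run_search` are hypothetical stand-ins (a real system would presumably use a language model for the decomposition and a search backend for retrieval), and the fixed facet list is an assumption made purely for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_subtopics(query: str) -> list[str]:
    """Hypothetical decomposition step; a fixed facet list stands in for a model."""
    facets = ["overview", "comparisons", "recent developments"]
    return [f"{query} {facet}" for facet in facets]


def run_search(subquery: str) -> dict:
    """Hypothetical search call; returns a placeholder result record."""
    return {"subquery": subquery, "results": [f"result for '{subquery}'"]}


def fan_out(query: str, max_workers: int = 8) -> list[dict]:
    """Issue one search per subtopic concurrently and collect the results."""
    subqueries = split_into_subtopics(query)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in input order even though calls overlap in time
        return list(pool.map(run_search, subqueries))


for record in fan_out("electric bikes"):
    print(record["subquery"])
```

A thread pool fits here because each sub-search would mostly wait on the network, so the calls can overlap without any change to the calling code.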

According to Google, the existing AI Overviews feature has already driven an increase of over 10% in usage for the types of queries that show it in large markets like the U.S. and India. Within AI Mode, the “Deep Search” functionality performs hundreds of searches simultaneously to produce citation-backed, in-depth reports, cutting research times from hours to minutes. This is particularly valuable for academics, researchers, and professionals.

Project Astra

Project Astra enhances visual search and is now accessible via Search Live. Building on the success of Google Lens (used by over 1.5 billion people), this feature allows users to point their smartphone cameras at objects or situations and tap the “Live” icon to receive real-time assistance.

The system analyzes what the user sees and provides explanations, suggestions, and links to relevant websites, videos, or forums—making it especially useful for DIY projects or translating menus in foreign countries.

Project Mariner

One of the most exciting updates is Project Mariner, which gives the search engine agentic capabilities. Now, users can automate online transactions like booking tickets, making reservations, or purchasing products.

For example, asking “Find two affordable Reds tickets for this Saturday” triggers AI Mode to scan sales platforms, compare prices, and present the best options. Thanks to partnerships with Ticketmaster, StubHub, Resy, and Vagaro, selected bookings can even be completed directly through Google.
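
The compare-and-rank step in that example can be sketched in a few lines of Python. Everything here is illustrative: the `Listing` type, the platform names, and the prices are made up, and the sketch says nothing about how Project Mariner actually gathers or ranks listings.

```python
from dataclasses import dataclass


@dataclass
class Listing:
    platform: str       # marketplace the listing came from (hypothetical names below)
    price_each: float   # price per ticket in USD
    available: int      # number of tickets in this listing


def best_options(listings: list[Listing], quantity: int, top_n: int = 3) -> list[Listing]:
    """Keep listings that can fill the whole order, cheapest first."""
    eligible = [item for item in listings if item.available >= quantity]
    return sorted(eligible, key=lambda item: item.price_each)[:top_n]


listings = [
    Listing("Platform A", 62.0, 4),
    Listing("Platform B", 48.5, 1),  # cheapest, but only one ticket left
    Listing("Platform C", 55.0, 2),
]
for option in best_options(listings, quantity=2):
    print(f"{option.platform}: ${option.price_each:.2f} each")
# prints:
# Platform C: $55.00 each
# Platform A: $62.00 each
```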

A New Shopping Experience: Virtual Try-On & Agentic Checkout

AI Mode brings transformative changes to online shopping. Google’s Shopping Graph tracks over 50 billion product listings, and more than 2 billion of those listings are refreshed every hour to keep price and availability data current.

A standout feature is virtual try-on, which lets users upload a photo of themselves and see how clothes look on their body. The advanced visual model realistically simulates fabric behavior and fit for different body types.

The new agentic checkout system monitors price drops and completes purchases on the user’s behalf via Google Pay, making shopping both intelligent and effortless.
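
The monitor-then-buy behavior can be pictured as a simple threshold check. The sketch below is an assumption-laden illustration, not Google's checkout flow: `watch_and_buy`, the target price, and the `purchase` callback are all hypothetical, and a real system would add user confirmation and payment handling.

```python
from typing import Callable, Iterable, Optional


def watch_and_buy(
    prices: Iterable[float],
    target: float,
    purchase: Callable[[float], str],
) -> Optional[str]:
    """Scan observed prices and buy once, at the first price at or below target."""
    for price in prices:
        if price <= target:
            return purchase(price)  # stop after a single purchase
    return None  # the price never reached the target


def fake_purchase(price: float) -> str:
    """Hypothetical stand-in for a payment step."""
    return f"bought at {price:.2f}"


print(watch_and_buy([59.0, 54.5, 48.0, 45.0], target=50.0, purchase=fake_purchase))
# prints: bought at 48.00
```

Returning after the first qualifying price keeps the agent from buying twice if the price keeps falling.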

Gemini-Powered Workspace Features

AI integrations into Google Workspace aim to boost productivity. Gmail now features personalized smart replies, which generate context-aware responses based on the user’s Gmail and Drive content, adjusting tone as needed.

The Inbox Cleanup tool understands complex commands like “Delete all unread emails from The Groomed Paw from last year,” while the new scheduling assistant simplifies setting appointments.

In Google Meet, the speech translation feature enables real-time translation during meetings while preserving tone and emphasis, promoting fluid cross-language communication.

Google Docs gains source-grounded writing, pulling verified information from specified sources to maintain accuracy. Google Vids introduces tools for turning presentations into dynamic videos, complete with AI avatars and automated audio editing.

Google AI Ultra

Google unveiled Google AI Ultra, a new premium subscription plan priced at $249.99/month (50% off for the first three months), offering access to the most advanced AI tools.

Subscribers get access to the latest version of Gemini, enhanced Deep Research, Veo 2 and 3 for video generation, Gemini 2.5 Pro’s upcoming Deep Think mode, and Flow, an AI filmmaking tool built on DeepMind’s most powerful models.

Other perks include access to Whisk and NotebookLM; Gemini integration in Chrome, Gmail, and Docs; Project Mariner features; YouTube Premium benefits; and 30 TB of storage. The previous AI Premium plan has been rebranded as Google AI Pro, with limited access to new tools like Flow.

Google Beam: 3D Video Communication Platform

Google announced Google Beam, the product version of Project Starline. This 3D video communication platform offers lifelike interaction without the need for special glasses or headsets.

Built on Google’s AI volumetric video model, Beam converts standard 2D streams into realistic 3D visuals, allowing participants to communicate as if they’re in the same room. Google is partnering with HP to release Beam hardware to select customers this year, with enterprise rollouts supported by partners like Zoom and AVI-SPL.

The platform integrates real-time speech translation, enabling participants to speak in their native languages and still understand one another clearly.

Android XR

Google introduced Android XR, a new platform to extend Gemini to headsets and smart glasses. Developed with Samsung and Qualcomm, Android XR enables hands-free interactions via wearable devices equipped with cameras, microphones, and speakers.

Users can message friends, schedule appointments, get directions, or translate foreign text using XR glasses. Google is working with brands like Gentle Monster and Warby Parker on stylish, everyday designs, and expanding its Samsung partnership to create a software and reference hardware platform.

Developers will be able to build for Android XR by the end of the year. Demo scenarios include live translation, navigation, and smart messaging—ushering in a new era of immersive AI.

Many of the technologies announced at Google I/O 2025 will begin rolling out today or over the course of the year. In closing remarks, Sundar Pichai stated:

“More intelligence is now available to everyone, everywhere. The world is responding—and adopting AI faster than ever before. This progress marks a new stage in the AI platform shift. Decades of research are now becoming reality for people, businesses, and communities around the world.”