At Computex 2024, AMD, led by CEO Lisa Su, introduced its new chip architecture strategy for AI data centers and AI PCs. With this strategy, the company aims to provide end-to-end AI infrastructure spanning central processing units (CPUs), neural processing units (NPUs), and graphics processing units (GPUs). So, what innovations can we expect? Here are the details…

New products and technologies for AI data centers from AMD

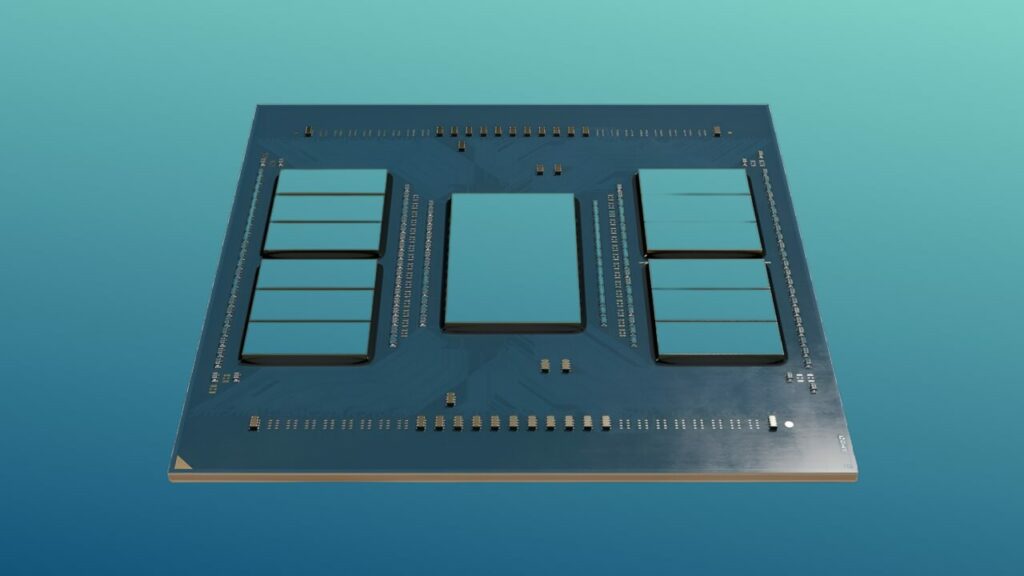

AMD unveiled an expanded roadmap for its AMD Instinct accelerators, including the new AMD Instinct MI325X accelerator, planned for release in the fourth quarter of 2024. The company also previewed its fifth-generation AMD EPYC server processors, expected to launch in the second half of 2024. For laptops and desktop PCs, AMD announced the third-generation AMD Ryzen AI 300 Series mobile processors and AMD Ryzen 9000 Series processors.

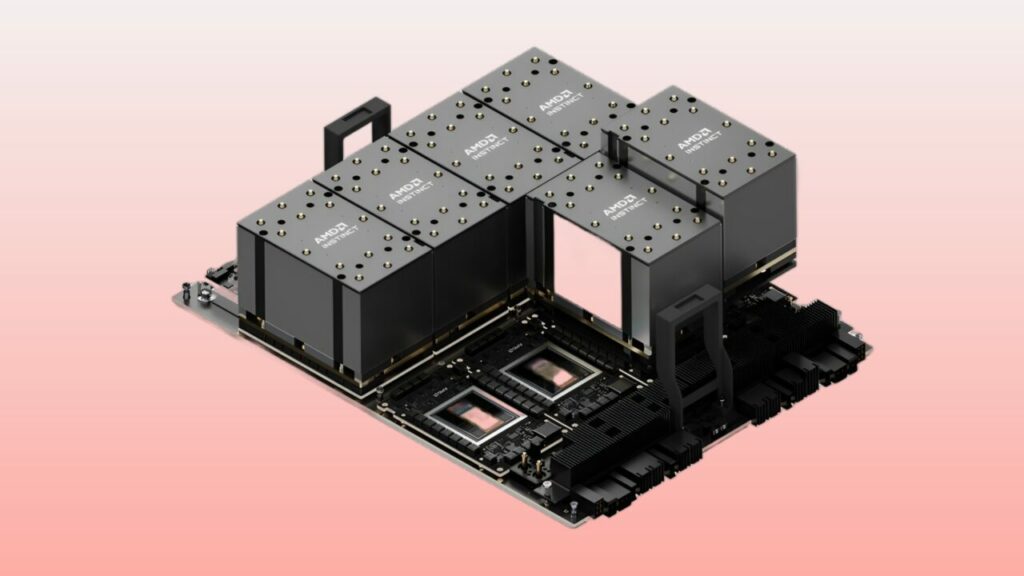

AMD Instinct accelerators and innovations

With its expanded roadmap, the AMD Instinct accelerator family aims to deliver leadership AI training and inference performance on an annual cadence. The AMD Instinct MI325X accelerator, set for release in the fourth quarter of 2024, features 288GB of HBM3E memory and 6 terabytes per second of memory bandwidth. The AMD Instinct MI350 series, planned for release in 2025 and built on the new AMD CDNA 4 architecture, is expected to offer up to a 35x increase in AI inference performance over the AMD Instinct MI300 series with AMD CDNA 3.

AMD’s new processors and ecosystem partners

In the presentation at Computex, AMD demonstrated how its broad AI processing engine portfolio is used to accelerate AI from PCs to data centers and edge devices. Partners such as Microsoft, HP, Lenovo, and Asus introduced new PC experiences with AMD Ryzen AI 300 Series processors and AMD Ryzen 9000 Series desktop processors.

Edge AI innovations and industry applications

AMD showcased how its AI and adaptive computing technologies support edge AI innovations. The new AMD Versal AI Edge Series Gen 2 combines FPGA programmable logic for real-time pre-processing, next-generation AI Engines for efficient AI inference, and embedded CPUs for post-processing on a single chip. AMD technology is used in a range of industry applications, including Subaru’s EyeSight ADAS platform and Canon’s Free Viewpoint Video System.

AMD’s new strategy and products are crucial for accelerating AI workloads and developing next-generation AI models. What do you think? How will AMD’s approach impact the world of AI and data centers? Don’t forget to share your thoughts with us…