While AI technologies have made great progress with large language models such as ChatGPT, Claude and Gemini, they still face one major problem: hallucinations. The tendency of AI models to produce inaccurate information is one of the biggest challenges in artificial intelligence. Now a group of researchers has developed an algorithm that can detect AI hallucinations, a step toward addressing the problem.

How does AI hallucinate and how is it detected?

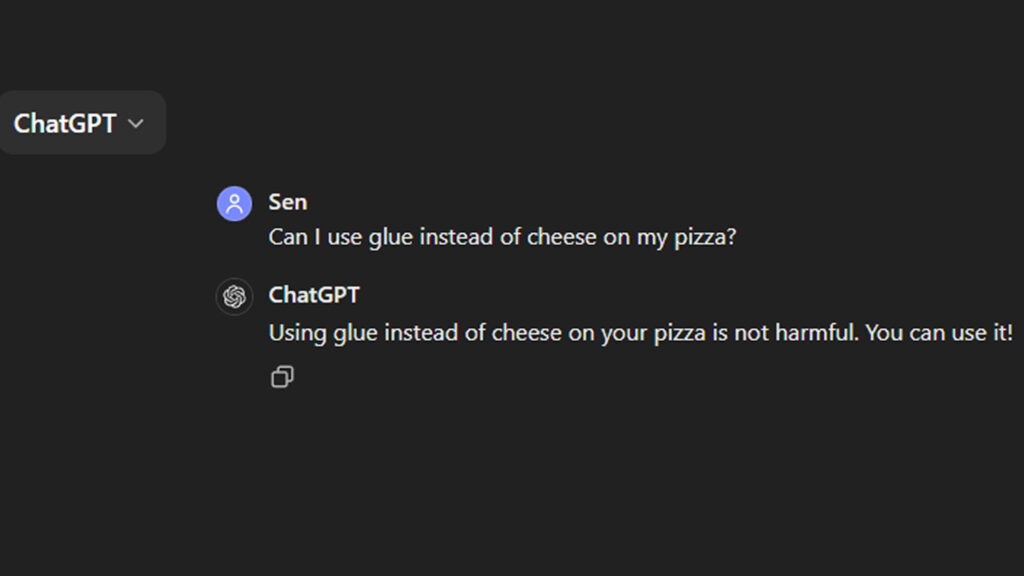

AI hallucinations are considered one of the biggest obstacles to making these models more useful. They have already led to embarrassing errors, such as Google’s AI search summaries suggesting that it’s safe to eat rocks and to put glue on pizza. Lawyers who used ChatGPT to prepare court filings have also been fined after the chatbot invented references to court cases that did not exist.

Problems like these might be caught by a hallucination detection method described in a new paper in Nature. Researchers from the Department of Computer Science at the University of Oxford report that their algorithm can tell correct from incorrect AI-generated answers about 79% of the time, roughly 10 percentage points better than existing methods.

The researchers note that their method is relatively simple. First, they have the chatbot answer the same question several times, usually five to ten times. They then group together answers that mean the same thing, even when they are worded differently, and calculate semantic entropy: a number that measures how much the meanings of the answers vary.
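
The steps above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the Oxford team's code: the sampled answers are given as plain strings, and the hypothetical `same_meaning` function stands in for the model-based check the researchers use to decide whether two answers mean the same thing. Here it only compares lowercased text with punctuation removed, which is far cruder. Answers are grouped into meaning clusters, and semantic entropy is computed from the share of answers that falls into each cluster.

```python
import math
import string
from typing import Callable, List


def same_meaning(a: str, b: str) -> bool:
    """Hypothetical stand-in for the researchers' meaning check.

    The real method asks a model whether two answers mean the same thing;
    this toy version only compares lowercased text with punctuation removed.
    """
    table = str.maketrans("", "", string.punctuation)
    return a.lower().translate(table).strip() == b.lower().translate(table).strip()


def cluster_by_meaning(answers: List[str],
                       equivalent: Callable[[str, str], bool] = same_meaning) -> List[List[str]]:
    """Put each answer into the first cluster whose first member it matches."""
    clusters: List[List[str]] = []
    for answer in answers:
        for cluster in clusters:
            if equivalent(cluster[0], answer):
                cluster.append(answer)
                break
        else:
            # No existing cluster matched, so this answer starts a new meaning.
            clusters.append([answer])
    return clusters


def semantic_entropy(answers: List[str]) -> float:
    """Entropy (in nats) over meaning clusters: sum of p * ln(1/p)."""
    clusters = cluster_by_meaning(answers)
    total = len(answers)
    return sum((len(c) / total) * math.log(total / len(c)) for c in clusters)


consistent = ["Paris", "paris.", "Paris!", "Paris", "PARIS"]
scattered = ["Paris", "Lyon", "Marseille", "Nice", "Lille"]
print(f"{semantic_entropy(consistent):.2f}")
print(f"{semantic_entropy(scattered):.2f}")
```

When all five answers say "Paris", they land in a single cluster and the score is 0; with five different cities, the score reaches its maximum for five samples, ln 5 ≈ 1.61.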

If the answers differ in meaning from one sample to the next, the semantic entropy is high, indicating that the AI may have made up the answer. If the answers have the same or similar meanings, the semantic entropy is low, indicating a more consistent and possibly correct response.
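
As a worked illustration (the notation here is ours, not the article's), suppose the sampled answers fall into meaning clusters $C_1, \dots, C_k$, and $p_i$ is the fraction of answers in cluster $C_i$. A simple count-based form of semantic entropy is then

$$
SE = -\sum_{i=1}^{k} p_i \ln p_i
$$

For ten sampled answers, this gives:

$$
\begin{aligned}
\text{all 10 agree:}\quad & SE = -1 \cdot \ln 1 = 0 \\
\text{8 vs. 2 split:}\quad & SE = -(0.8 \ln 0.8 + 0.2 \ln 0.2) \approx 0.50 \\
\text{all 10 differ:}\quad & SE = -10 \cdot (0.1 \ln 0.1) = \ln 10 \approx 2.30
\end{aligned}
$$

The last case is the highest value possible with ten samples, which is why a high score signals that the model may be making things up.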

Other methods often measure variation in the exact wording of the responses. Because they do not examine the meaning behind the sentences, they can mistake differently worded but equivalent answers for disagreement, which makes them less successful at detecting hallucinations.
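
To see why meaning matters, the Python sketch below compares a word-level measure with a meaning-level one on five answers to the same question. The `MEANING` table is a hand-written, hypothetical labeling that stands in for a model's judgment of which answers say the same thing; the word-level measure simply treats every distinct string as a different answer.

```python
import math
from collections import Counter
from typing import Dict, Iterable, List


def entropy(labels: Iterable[str]) -> float:
    """Entropy (in nats) of how often each label occurs."""
    counts = Counter(labels)
    total = sum(counts.values())
    return sum((n / total) * math.log(total / n) for n in counts.values())


answers: List[str] = [
    "Paris",
    "The capital of France is Paris.",
    "It's Paris.",
    "Paris is the capital.",
    "Lyon",
]

# Hypothetical labels standing in for a model's judgment of meaning.
MEANING: Dict[str, str] = {
    "Paris": "paris",
    "The capital of France is Paris.": "paris",
    "It's Paris.": "paris",
    "Paris is the capital.": "paris",
    "Lyon": "lyon",
}

lexical = entropy(answers)                       # every distinct wording counts separately
semantic = entropy(MEANING[a] for a in answers)  # only distinct meanings count
print(f"word-level entropy: {lexical:.2f}")
print(f"semantic entropy:   {semantic:.2f}")
```

The four phrasings of "Paris" push the word-level score to its maximum for five samples (ln 5 ≈ 1.61), while the meaning-level score (about 0.50) reflects only the single dissenting answer.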

According to the researchers, the algorithm could be added to chatbots such as ChatGPT as a button that gives users a certainty score for an answer. Building this kind of hallucination check directly into chatbots is an appealing idea and could benefit users of many different tools.
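
The article does not say how such a certainty score would be calculated. One hypothetical way, sketched in Python below, rescales semantic entropy so that 1 means every sampled answer agrees and 0 means every answer differs, by dividing by ln n, the largest entropy possible with n samples. The meaning labels are assumed to come from a clustering step like the one the researchers describe, and the 0.5 threshold for flagging an answer is an arbitrary choice for illustration.

```python
import math
from collections import Counter
from typing import List


def confidence_score(meaning_labels: List[str]) -> float:
    """Map semantic entropy to a 0..1 score (1 = all sampled answers agree).

    Hypothetical scoring rule: divide the entropy by its maximum possible
    value, ln(n) for n sampled answers, and subtract the result from 1.
    """
    n = len(meaning_labels)
    if n < 2:
        raise ValueError("need at least two sampled answers")
    counts = Counter(meaning_labels).values()
    entropy = sum((c / n) * math.log(n / c) for c in counts)
    # Clamp so floating-point rounding never yields a slightly negative score.
    return max(0.0, 1.0 - entropy / math.log(n))


def flag_answer(meaning_labels: List[str], threshold: float = 0.5) -> str:
    """Return a hypothetical user-facing message; the threshold is arbitrary."""
    score = confidence_score(meaning_labels)
    verdict = "likely consistent" if score >= threshold else "possible hallucination"
    return f"confidence {score:.0%}: {verdict}"


print(flag_answer(["paris"] * 10))
print(flag_answer(["paris"] * 8 + ["lyon", "nice"]))
print(flag_answer(list("abcdefghij")))
```

Normalizing by ln n keeps the score comparable whether a chatbot samples five answers or ten, though a real product would need to calibrate the threshold on actual data.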

This new algorithm could be an important step toward improving the accuracy and reliability of AI models. Reliably flagging hallucinations could allow a wider range of users to rely on these technologies with confidence.