Google has launched a new accessibility feature for Gemini, its generative AI app, on iOS and Android. With the update, Gemini Live now supports subtitles, so users can follow the AI's responses on screen as text in real time.

Gemini Live now offers a subtitle option

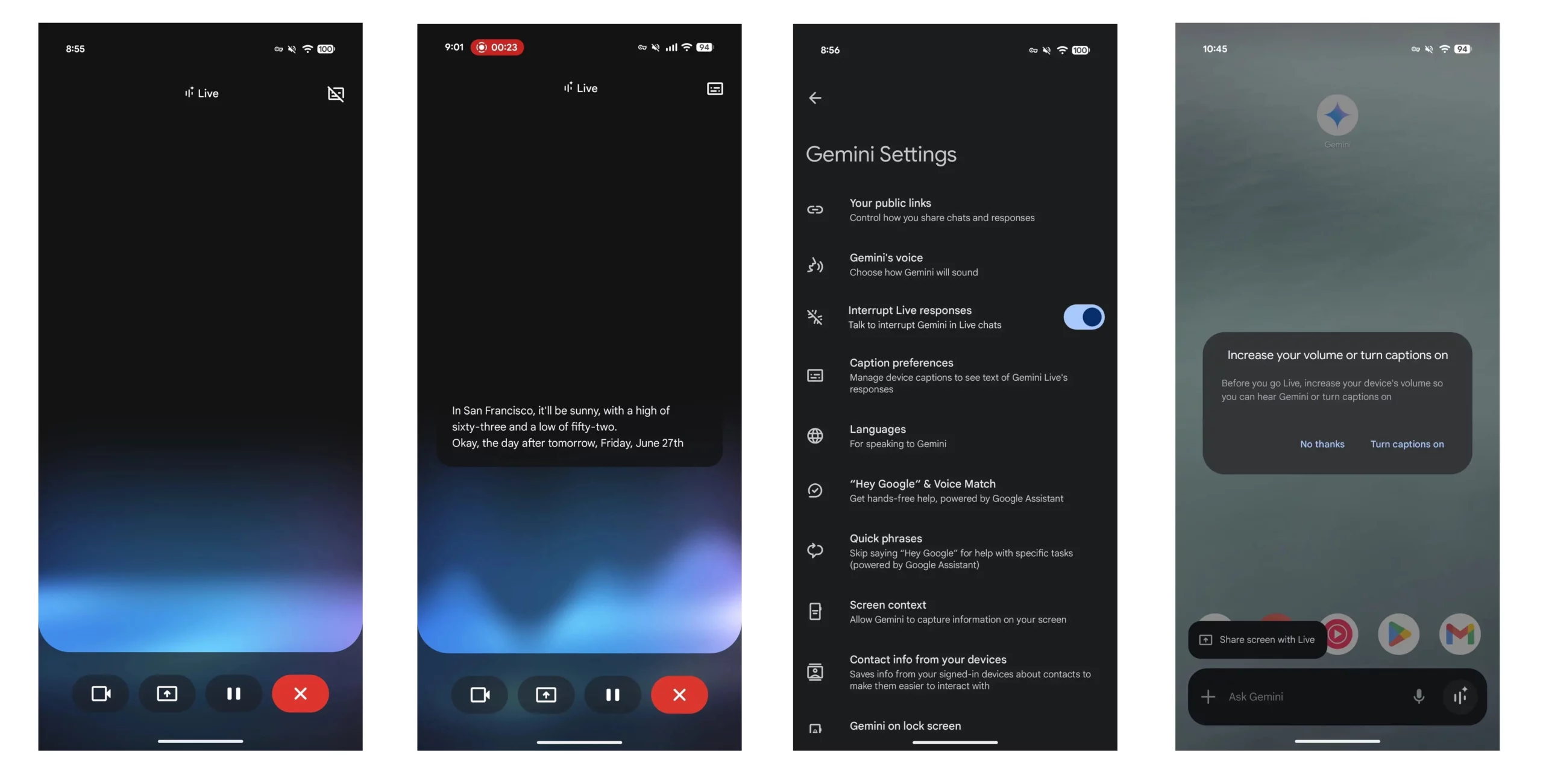

The update adds a small button to the upper right corner of the Gemini Live interface. When activated, it opens a floating box that shows the AI's responses as text.

The subtitle box shows only what the AI says. The user’s side of the conversation appears in the chat record after the call ends. This keeps the screen layout simple during back-and-forth conversation.

Subtitles work in both audio and video calls. In audio mode, the subtitle box is fixed in the middle of the screen; in video mode, it is fixed at the top. The box cannot be moved or resized.

Once a user turns the feature on, the system remembers the choice and enables subtitles automatically in subsequent live calls. When the live call interface is closed completely, however, subtitles are turned off as well.

The feature is especially useful for users who are in quiet environments without headphones. In previous versions, a live call could not be started if the device volume was below a certain level. Subtitle support removes this limitation, so Gemini Live can be used regardless of the volume level.

On Android, the update arrived in stable version 16.23 of the Google app. The feature is also widely available to iOS users. Subtitle support is intended to make Gemini Live more accessible and efficient, especially in noisy or quiet environments.