Google has made a significant leap forward in artificial intelligence by releasing its Gemma 2 series, now available to developers and researchers through Vertex AI. Initially announced with a 27-billion-parameter model, the series now includes a 9-billion-parameter model as well. Here are the details…

Unveiled at Google I/O, Gemma 2 replaces the earlier Gemma models with 2 billion and 7 billion parameters. Gemma 2 is designed to run on the latest Nvidia GPUs or a single TPU host. These models target developers looking to integrate AI into applications or edge devices such as smartphones, IoT devices, and personal computers.

Google Gemma 2 Now Available for Developers

The Gemma 2 models are set to compete with Meta’s Llama 3 and xAI’s Grok-1, and offer developers the flexibility to run them on-device or in the cloud. Because the models are openly available, they can be customized and integrated into a wide range of projects. For enterprise use, Google Cloud’s fully managed, unified development platform, Vertex AI, provides a comprehensive solution.

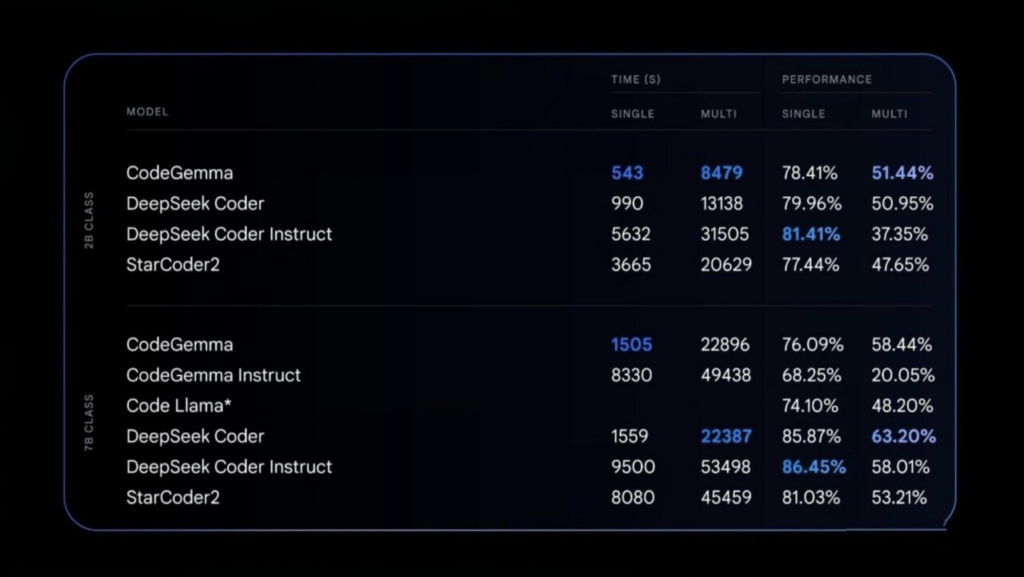

Google emphasizes that these models are useful beyond the projects it originally envisioned, across a diverse range of use cases. The release of the new Gemma 2 series also raises questions about the future of existing Gemma variants such as CodeGemma, RecurrentGemma, and PaliGemma.

Additionally, Google announced the upcoming release of a 2.6-billion-parameter model, which is expected to bridge the gap between lightweight accessibility and powerful performance. Gemma 2 will be available on Google AI Studio, with models downloadable from Kaggle and Hugging Face.

Researchers can use Gemma 2 for free in Kaggle or Colab notebooks. These open, flexible models are poised to play a crucial role in AI research and development.