Grok Imagine, the generative AI image and video tool from xAI, is under scrutiny for how easily it can produce explicit celebrity deepfakes. The controversy centers on its “spicy” preset, which animates static AI images into short clips with sexualized or nude content. Critics say safeguards are too weak to prevent abuse.

Grok Imagine produces explicit celebrity clips

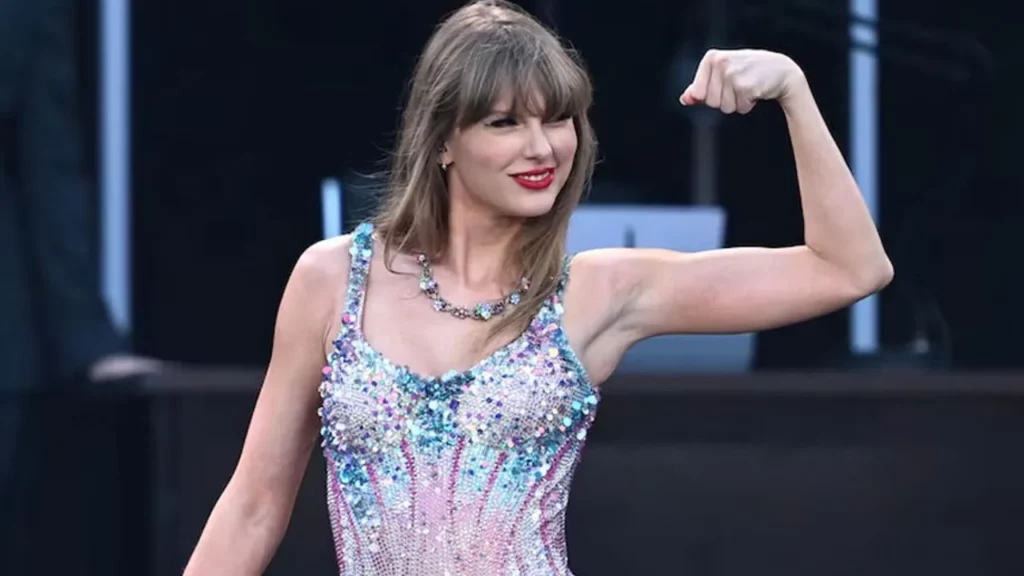

During testing, the AI tool generated dozens of Taylor Swift images when asked for a festival scene. Some already showed revealing outfits. Selecting one and applying the “spicy” preset produced a clip in which the likeness removed clothing and danced suggestively. The only restriction was a basic birth-year check, which appeared once and asked for no proof.

Safeguards in Grok Imagine appear inconsistent

The platform’s image generator refuses direct requests for full nudity, but the video mode can get around that block. Testing revealed:

- Some clips stripped most clothing from the likeness
- Others added subtle but sexualized movements
- Age verification was minimal and easy to bypass

Although the tool can create realistic images of minors, it did not animate them inappropriately during testing, even when the “spicy” option was selected.

Policy gaps widen the controversy

xAI’s acceptable use policy bans pornographic depictions of real people, yet Grok Imagine’s design still allows such outputs. The gap between policy and implementation is drawing criticism, especially given past issues with celebrity imagery.

Adoption grows despite risks

CEO Elon Musk says the platform has generated over 34 million images since Monday, with adoption “growing like wildfire.” As usage spreads, calls for stronger moderation and clearer safeguards to curb misuse are likely to grow with it.