ChatGPT's search engine, developed with the aim of "accessing fast and accurate information", was an ambitious project. However, according to recent tests by Columbia University's Tow Center for Digital Journalism, that claim has serious shortcomings. The research found that ChatGPT gives inconsistent results in news searches and presents false information with confidence. Here are the details…

Is ChatGPT unreliable in news search?

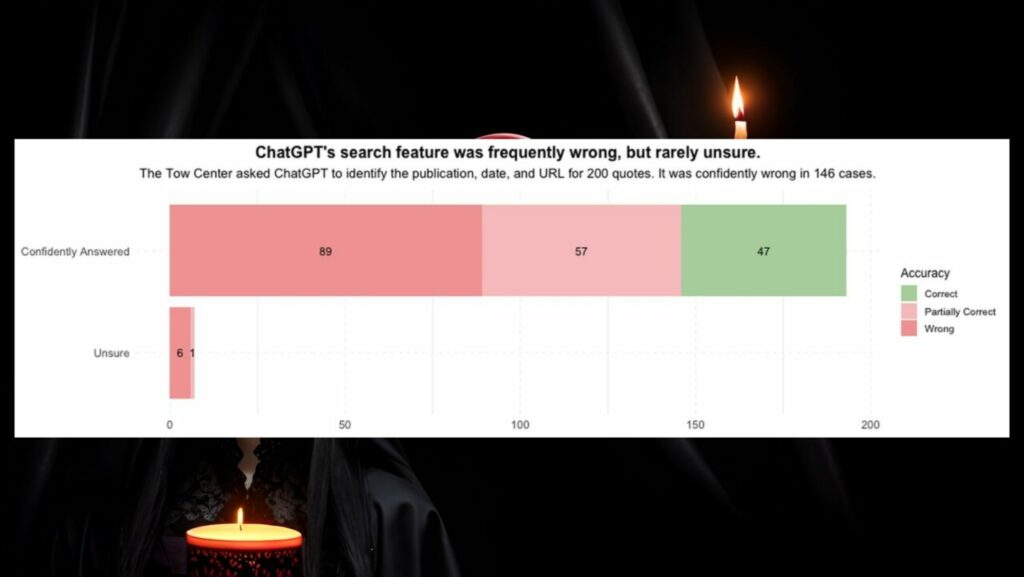

In one example, ChatGPT passed off a reader's letter published in the Orlando Sentinel as a Time magazine story. In another, it attributed a New York Times article to a website that had copied it without permission. The researchers asked ChatGPT to identify the sources of 200 quotes from 20 different publications. Here are the results:

- 153 incorrect answers: quotes were either attributed to the wrong source entirely or given with incomplete information.

- 7 admissions of uncertainty: in only 7 cases did ChatGPT acknowledge that it could not give an accurate answer, saying "I cannot find this information" or hedging with phrases such as "apparently," "probably," or "I couldn't quite find it."

OpenAI called the study an "unusual evaluation" of its products and attributed the problems to a lack of data. Nevertheless, the company said it is working to improve its search results and increase the system's accuracy.

One of the biggest problems is that ChatGPT does not explain how its answers are grounded in its sources. Even when the system cannot retrieve content from sites that have not authorized it, it still generates incomplete or inaccurate answers and presents them with unwarranted confidence. In other words, users are confronted with misleading information.

What do you think about this? How could these shortcomings of artificial intelligence in news search be solved? Share your opinions in the comments section below.