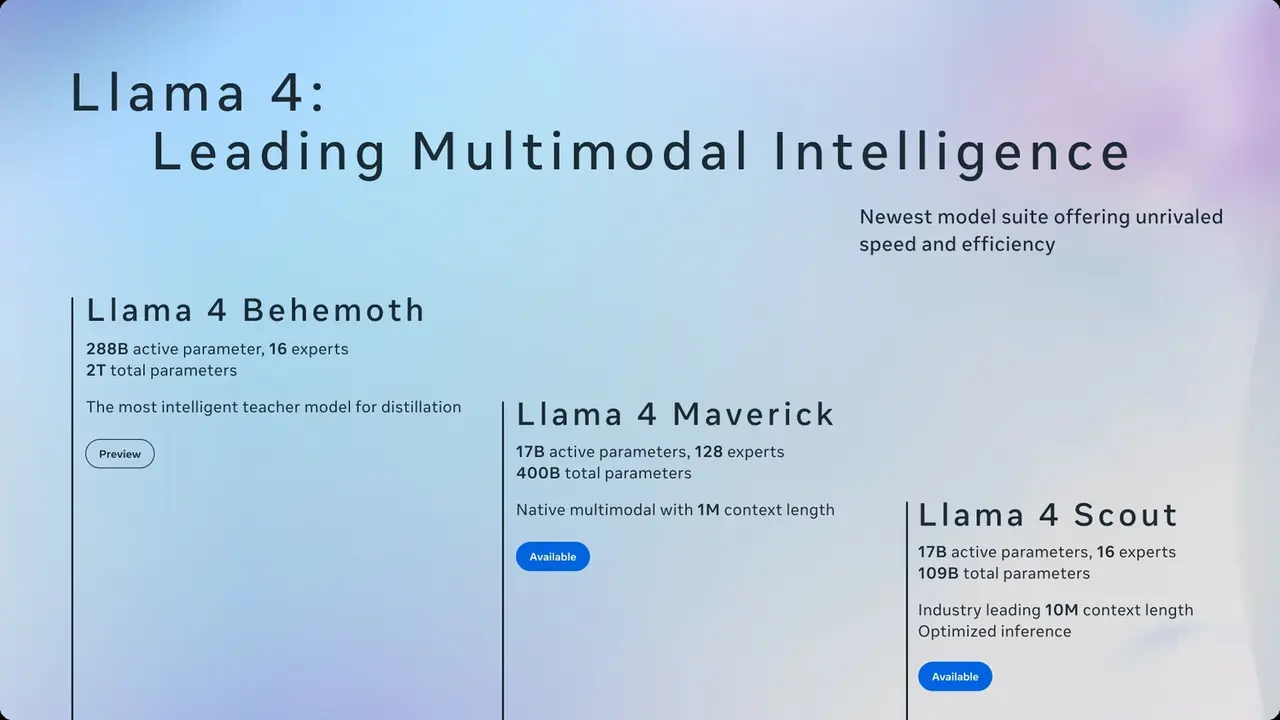

Meta has opened the door to a new era in artificial intelligence with Llama 4. The Llama 4 family, which the company unveiled over the weekend, consists of three models: Llama 4 Scout, Llama 4 Maverick and Llama 4 Behemoth, the last of which is still in training.

Meta has officially announced Llama 4

The Llama 4 family stands out for its multimodal design: the models were trained not only on text but also on image and video data. Scout and Maverick are available on Meta's official platform, Llama.com. Behemoth is not yet open to the public; according to Meta, the model is still in training and is used only in internal tests.

The new models are also integrated into Meta's own AI-powered applications. The Meta AI assistant on WhatsApp, Messenger and Instagram has been updated to use Llama 4 in 40 countries, although for now the multimodal features are available only in the US and only in English.

With the Llama 4 family, Meta uses the “Mixture of Experts” (MoE) architecture for the first time. Instead of a single dense network in which every parameter processes every input, an MoE model contains many smaller “expert” sub-networks, and a learned router selects only a few of them for each token. Because only the selected experts run, the model does far less computation per token than a dense model with the same total number of parameters, which makes the system more efficient.
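The routing idea can be sketched in a few lines of Python. This is a generic top-k MoE step under simplifying assumptions, not Meta's implementation: the experts are toy functions that just scale their input, the router is a single linear layer with made-up weights, and the names `moe_forward` and `make_expert` are hypothetical. Real models perform this routing for each token inside every MoE layer and use trained feed-forward networks as experts.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale: float) -> Expert:
    """Hypothetical toy expert: multiplies its input by a constant.
    In a real MoE model each expert is a trained feed-forward network."""
    def expert(v: Vector) -> Vector:
        return [scale * x for x in v]
    return expert


def moe_forward(token: Vector, router_weights: List[Vector],
                experts: List[Expert], top_k: int = 1) -> Tuple[Vector, List[int]]:
    """Route one token to its top_k experts and mix their outputs."""
    # Router: a linear layer producing one score per expert.
    scores = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # Keep only the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda e: scores[e], reverse=True)[:top_k]
    # Softmax over the chosen experts' scores gives the mixing weights.
    gates = softmax([scores[e] for e in chosen])
    out = [0.0] * len(token)
    for gate, e in zip(gates, chosen):
        y = experts[e](token)  # only the chosen experts are ever called
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


# Four toy experts and a hand-picked 4x2 router (hypothetical values).
experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

out, chosen = moe_forward([1.0, 0.5], router_weights, experts, top_k=1)
print(chosen, out)  # → [0] [1.0, 0.5]
```

With `top_k=1`, only expert 0 runs for this token; raising `top_k` to 2 blends the outputs of experts 0 and 1, weighted by the router's softmax scores. The saving comes from the loop calling only the chosen experts, while the others stay idle.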

Llama 4 Maverick illustrates the effect: it has 400 billion parameters in total, but only 17 billion of them are active for any given token. Meta claims that its new models outperform competitors such as GPT-4o and Gemini 2.0.
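Using Maverick's rounded figures of 400 billion total and 17 billion active parameters, a quick calculation shows how small the active share is:

$$
\frac{17 \times 10^{9}}{400 \times 10^{9}} = 0.0425 = 4.25\%
$$

In other words, only about 4% of Maverick's parameters take part in processing any single token, which is where the efficiency gain comes from; the full set of weights, however, typically still has to be stored and loaded to serve the model.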
