Meta has officially announced Llama 3.1, its new open-source large language model. It comes in three sizes: 8B, 70B, and a massive 405B parameters. All versions support a 128K-token context length, and Meta says the 405B version competes with GPT-4 and Claude 3.5 Sonnet. Here are the details:

Meta’s Llama 3.1 open-source large language model offerings

Meta's new Llama 3.1 series brings significant advances over its predecessors. First, the models' reasoning capabilities and multilingual support have been substantially improved. Second, the context length has been extended to 128K tokens, up from 8K in Llama 3, so the models can work with much longer inputs in a single prompt. The most notable addition, however, is the new flagship model with 405B parameters.

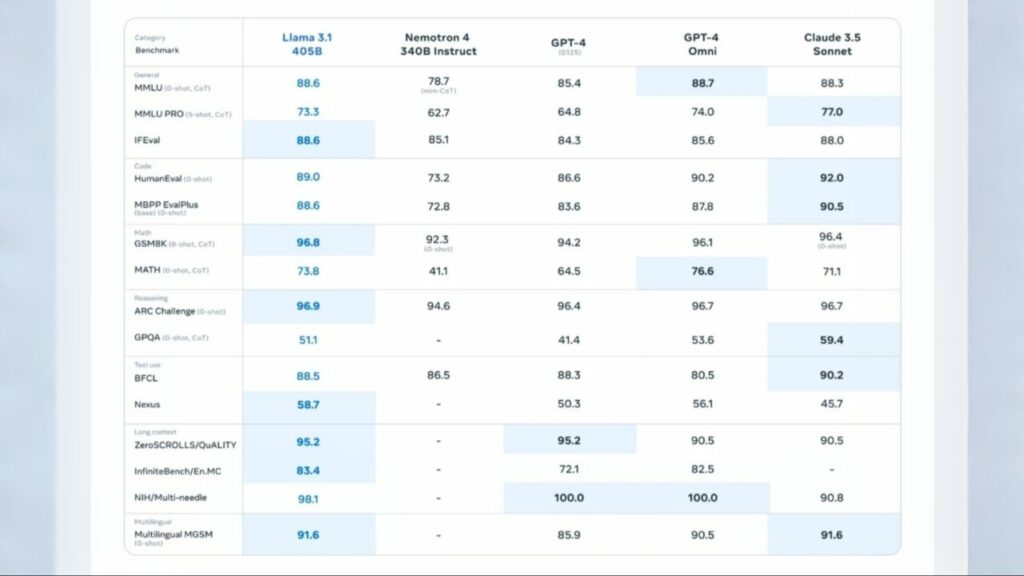

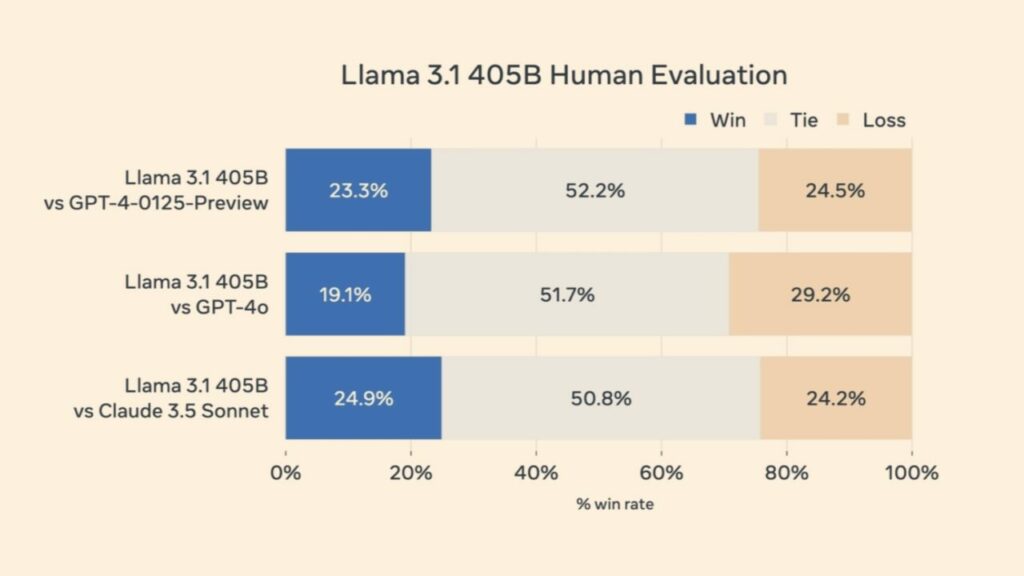

Meta states that Llama 3.1 405B is comparable to leading closed-source models such as GPT-4, GPT-4o, and Claude 3.5 Sonnet on tasks including general knowledge, steerability, mathematics, tool use, and multilingual translation. If these claims hold up, it would put an openly available model on par with some of the most powerful models on the market.

Meanwhile, Meta says the 8B and 70B Llama 3.1 models are also competitive with both closed and open-source models of similar size. As for access, Llama 3.1 is available for download from Meta's official website and from Hugging Face.

In addition, more than 25 partners, including AWS, NVIDIA, Dell, Microsoft Azure, and Google Cloud, are supporting the model from launch, so developers and researchers who want to use Llama 3.1 have plenty of options. With its improved capabilities and range of model sizes, Llama 3.1 could make significant contributions to a wide variety of applications and research fields.

What do you think? Share your thoughts in the comments below.