Mistral has announced a strategic partnership with Microsoft alongside the launch of Mistral Large, its newest and largest AI model, aimed at enterprise use.

What can we expect from Mistral Large?

Available starting today, Mistral Large is a text generation model built to handle complex multilingual reasoning tasks, including text understanding, transformation and code generation.

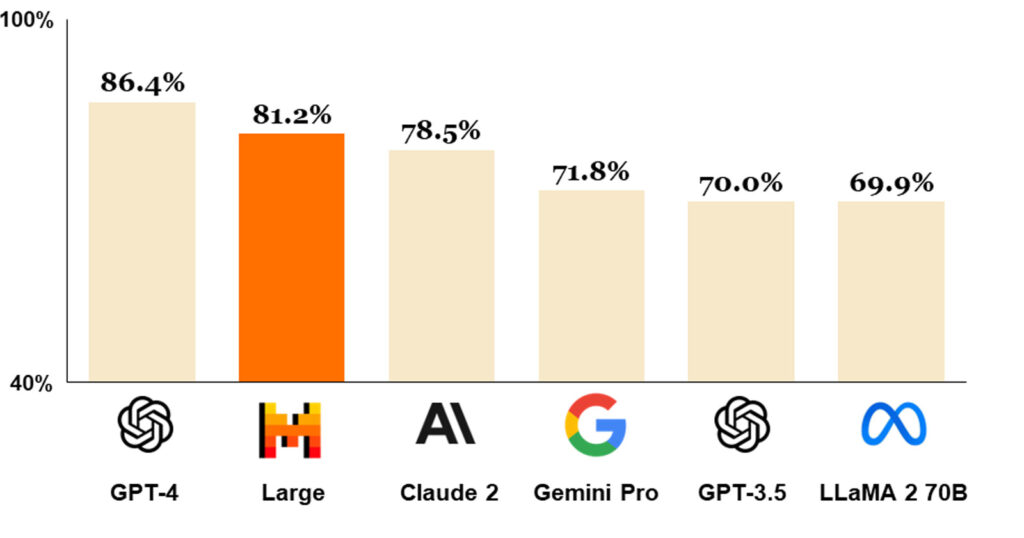

According to MMLU benchmark results shared by the company, the model ranks second among models generally available through an API, behind only GPT-4.

Mistral says that the new model will be available primarily through its own API, but will also be offered through Azure AI thanks to the new partnership with Microsoft. The company has also released a chat app to help businesses get a sense of what the new model offers.

As a multilingual model, Mistral Large can understand, reason and produce text with native fluency not only in English but also in other languages such as French, Spanish, German and Italian.

Other companies, such as Google and OpenAI, also offer multilingual models, but Mistral emphasizes that its model's nuanced understanding of grammar and cultural context in each of these languages will yield better results.

The model has a context window of 32K tokens, so it can process large documents and recall information from them accurately. It also follows instructions precisely, which lets developers design their own moderation policies, and it natively supports function calling.
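To give a sense of what a 32K-token window means in practice, here is a minimal sketch that estimates whether a document fits and splits it if it does not. It is an illustration only: the 32,000 figure is taken as a round reading of "32K", the four-characters-per-token ratio is a rough rule of thumb rather than Mistral's actual tokenizer, and the function names and the `reserved_tokens` parameter are hypothetical.

```python
# Assumptions (not from Mistral's documentation):
# - "32K" is read as 32,000 tokens.
# - ~4 characters per token is a rough heuristic, not the real tokenizer.
CONTEXT_WINDOW_TOKENS = 32_000
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def split_to_fit(text: str, reserved_tokens: int = 2_000) -> list[str]:
    """Split text into chunks whose estimated size fits the window,
    leaving reserved_tokens free for the instructions and the reply."""
    budget_chars = (CONTEXT_WINDOW_TOKENS - reserved_tokens) * CHARS_PER_TOKEN
    if budget_chars <= 0:
        raise ValueError("reserved_tokens must be smaller than the context window")
    return [text[i:i + budget_chars] for i in range(0, len(text), budget_chars)]


# Example: a 250,000-character document is too large for a single request.
document = "word " * 50_000
chunks = split_to_fit(document)
print(estimate_tokens(document), len(chunks))
```

A real integration would count tokens with the model's own tokenizer and split on paragraph or sentence boundaries rather than at fixed character offsets.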

It remains to be seen how Mistral Large performs in real-world use, especially against models with much larger context windows, such as Gemini 1.5, which supports up to 1 million tokens. Mistral claims that the new model holds up well against competing models. What do you think? Share your thoughts with us in the comments section.