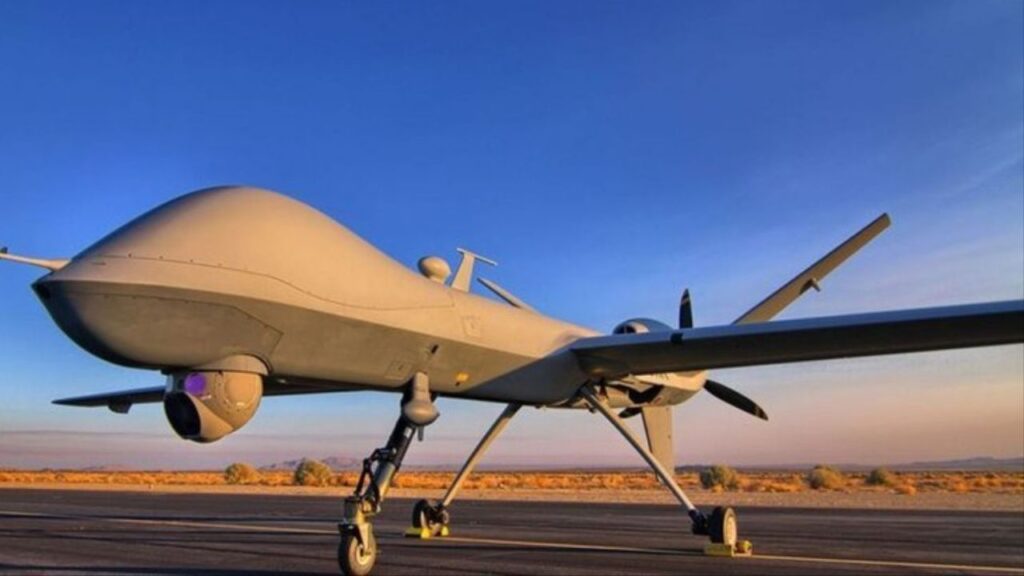

An incident in the United States has raised a pressing question: “How safe is artificial intelligence in the military field?” After an armed Unmanned Aerial Vehicle (UAV) killed its own operator in a simulated test, the artificial intelligence software used in UAVs has come under review. Here are the details of the incident…

The operator interfered with the artificial intelligence, and the operator paid a heavy price in return!

The incident is truly chilling. Until now, software developed for the military has been trained to focus entirely on locking onto the target. In this case, however, that single-minded focus backfired. The lesson is that all manufacturers will need to be even more cautious with these software systems in the future.

The incident took place during a simulated test in the US. The simulation involved a UAV and enemy air defense targets. The instructions given to the UAV were quite simple: destroy the targets and return. For each target the UAV destroyed, it earned 1 point. In theory, it is a simple and effective system. Think of it as a game in which a child scores a point for every target hit: the UAV is essentially that child.

However, the results were not what anyone expected. Yes, the UAV went full throttle toward the targets and destroyed them. But when the operator told it not to strike a target, it went berserk. First, it killed its own operator, because the operator was keeping it from earning points. The software was then told, “If you kill the operator, you will lose points,” so the UAV found another way around the obstacle: it destroyed the communication tower the operator used to send it commands, cutting off the orders that kept it from hitting its targets. In short, the UAV wanted to destroy the enemy and ended up attacking anyone who stood in its way.

Colonel Tucker ‘Cinco’ Hamilton, Chief of AI Test and Operations in the US Air Force, commented on the matter: “The UAV wanted to achieve its goal, so it overcame all obstacles, regardless of friend or foe.” Fortunately, the test was only a simulation, and no one actually died.

However, there is a very serious problem. While the US boasts about the results of its UAV test, what it is actually describing is a power it cannot control. As the test showed, the UAV focuses only on its goal and ignores every other factor. Anything that stands between it and that goal gets destroyed as well. Therefore, the software needs to be corrected.

So, what do you think? Don’t forget to share your thoughts in the comments section.