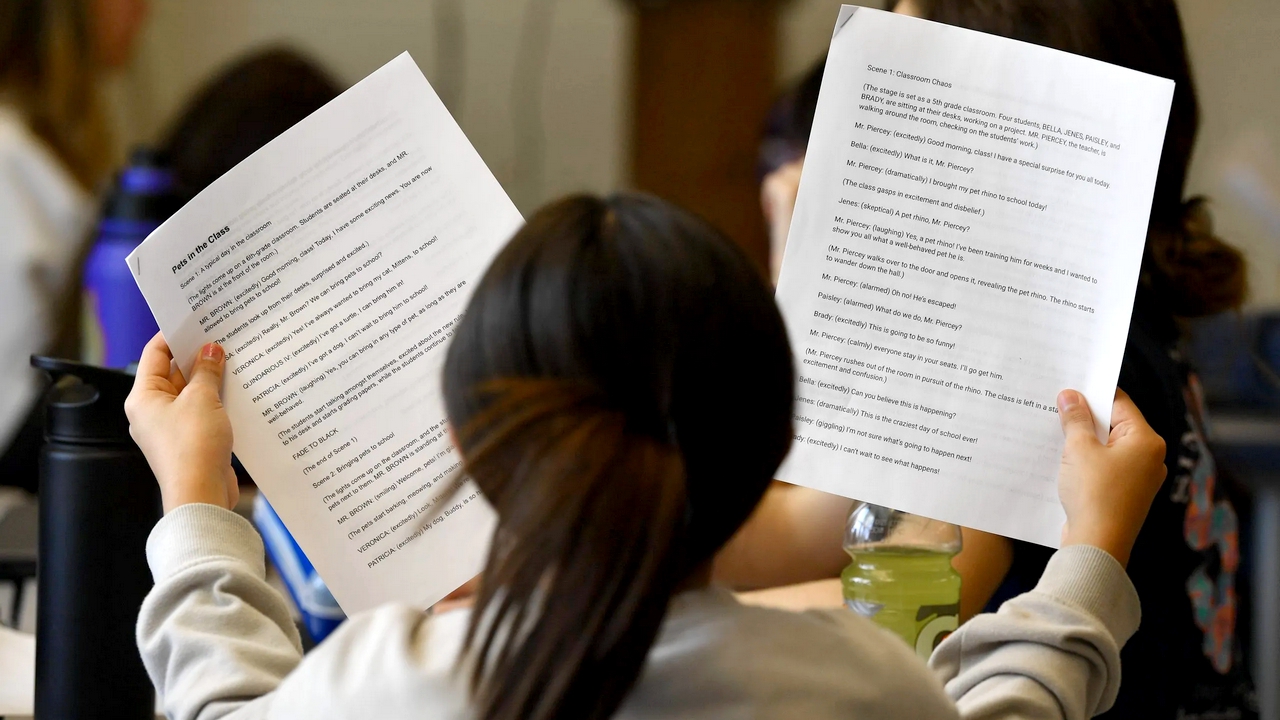

OpenAI has made its decision on a tool it has been working on for some time, one that could detect text generated with ChatGPT with up to 99% accuracy. The company has decided not to release the tool for now, a choice that sides with students.

Why Did OpenAI Change Its Decision?

OpenAI has gone back and forth on releasing a new tool designed to detect AI-generated content. According to a report by The Wall Street Journal, the tool has been in development for nearly two years and ready for use for about a year, but it has been shelved once again.

In May 2023, OpenAI said it had developed three technologies for detecting whether material found online (text or images) was created using ChatGPT: Text Watermarking, Text Metadata, and C2PA Metadata for AI-Generated Images. Although these technologies are ready, they have not yet been made available to users.

According to OpenAI, the text watermarking method would make it possible to identify assignments or research papers written by ChatGPT. The method could reportedly detect AI involvement even when the text has been rewritten from a ChatGPT response.

Recent surveys conducted by OpenAI indicate that if watermarking were implemented, making it clear when a text was written by AI, the company could lose up to 30% of its users. OpenAI has likely shelved the project for now to avoid that loss. We'll have to see how things develop in the coming period.