Researchers at the University of Minnesota have demonstrated a new hardware device for AI computation. The device, called CRAM (Computational Random Access Memory), could reduce the energy consumed by AI applications by a factor of 1,000.

CRAM: Reducing AI Energy Consumption by 1000 Times

In conventional AI hardware, data continuously travels back and forth between processing units and memory, and that movement consumes a significant amount of energy. CRAM eliminates these energy-intensive transfers by processing data directly within the memory cells, which removes the bottleneck between processor and memory.

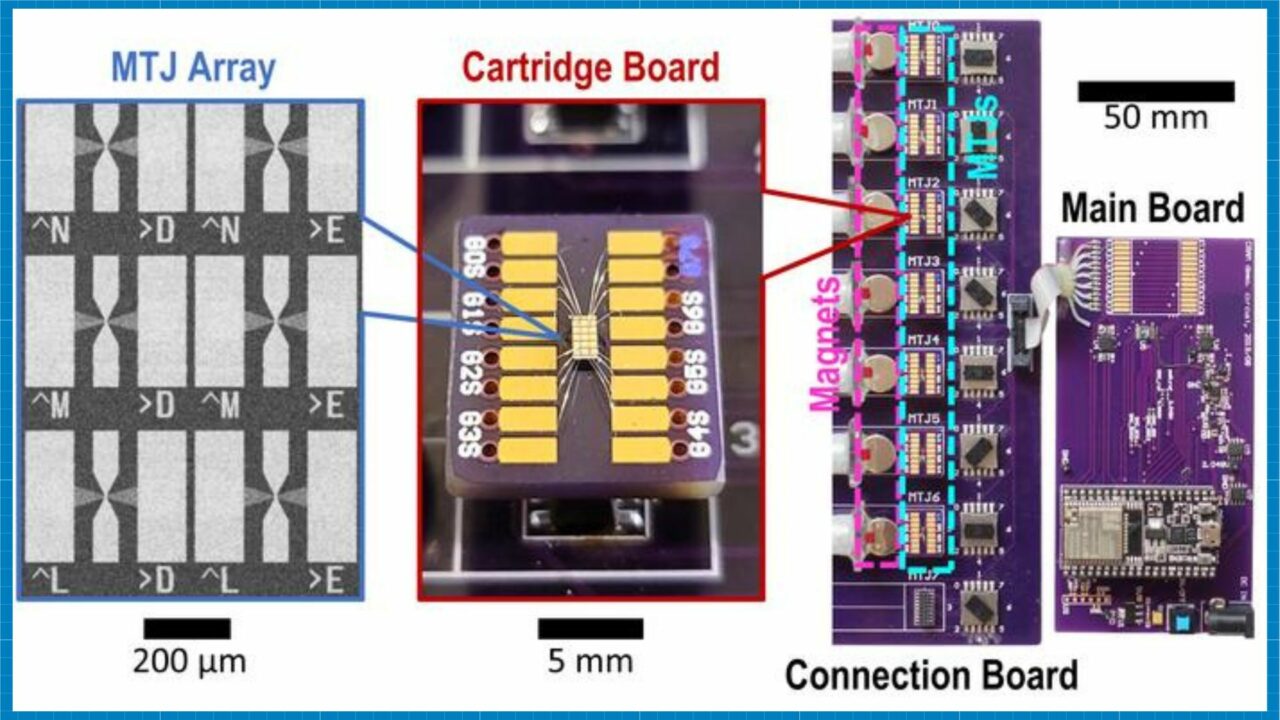
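
The data-movement argument above can be illustrated with a toy energy model. This is only a sketch: the function `total_energy`, the per-operation costs, and the assumption of three transfers per operation are hypothetical placeholders in arbitrary units, not figures from the CRAM research. It also assumes the logic operation itself costs the same in both designs.

```python
def total_energy(num_ops, compute_energy, transfer_energy, transfers_per_op):
    """Total energy (arbitrary units) for num_ops operations in a toy model."""
    return num_ops * (compute_energy + transfers_per_op * transfer_energy)


# Illustrative placeholders, NOT measured values from the CRAM paper.
NUM_OPS = 1_000_000
COMPUTE_ENERGY = 1.0    # assumed cost of one logic operation
TRANSFER_ENERGY = 50.0  # assumed cost of moving one value between memory and processor

# Conventional design: assume two operand loads and one result store per operation.
conventional = total_energy(NUM_OPS, COMPUTE_ENERGY, TRANSFER_ENERGY, transfers_per_op=3)

# In-memory design: the operation happens where the data lives, so no transfers.
in_memory = total_energy(NUM_OPS, COMPUTE_ENERGY, TRANSFER_ENERGY, transfers_per_op=0)

print(f"conventional / in-memory energy ratio: {conventional / in_memory:.0f}x")
```

In this model the ratio depends entirely on how expensive a transfer is relative to a computation, which is why removing transfers matters most when data movement dominates the energy budget.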

The device is built on magnetic tunnel junctions (MTJs), which are nanostructured devices. Compared with traditional transistor-based chips, they offer several advantages: higher speed, lower energy consumption, and resilience to harsh environments.

A CRAM-based machine learning accelerator is estimated to deliver energy savings of up to 2,500 times compared to traditional methods. Energy efficiency at this scale could lead to significant changes in the future of AI. The research team plans to collaborate with semiconductor industry leaders to further develop CRAM technology and advance AI functionality.
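
To put the reduction factors quoted in this article into more familiar terms, the short sketch below converts them into the percentage of energy saved. The helper name `percent_saved` is made up for illustration; the only inputs are the factors of 1,000 and 2,500 mentioned above.

```python
def percent_saved(reduction_factor):
    """Percentage of energy saved when consumption drops by reduction_factor."""
    return (1 - 1 / reduction_factor) * 100


# Reduction factors quoted in the article.
for factor in (1000, 2500):
    print(f"{factor}x reduction -> {percent_saved(factor):.2f}% less energy")
```

A 1,000-fold reduction corresponds to 99.90% less energy, and a 2,500-fold reduction to 99.96%, so the step from one estimate to the other is small in percentage terms even though the factor more than doubles.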

Yang Lv, the lead author of the research, says, “This work represents the first experimental demonstration of CRAM where data can be processed without leaving the memory.” The team has been working on this technology for over 20 years and now states that it is ready for real-world applications.

Traditional computer architectures spend a lot of energy moving data between memory and processor. CRAM avoids that cost by processing data directly within the memory cells, which offers advantages in both speed and energy efficiency. You can find the details of the paper here.
