As part of its ongoing work on AI safety and risk management, OpenAI has released the system card for the GPT-4o model. The card explains in detail the strategies used to assess and mitigate the potential risks associated with GPT-4o. Here are the key highlights of the system card’s contents.

OpenAI Releases System Card Detailing Security Measures for GPT-4o Model

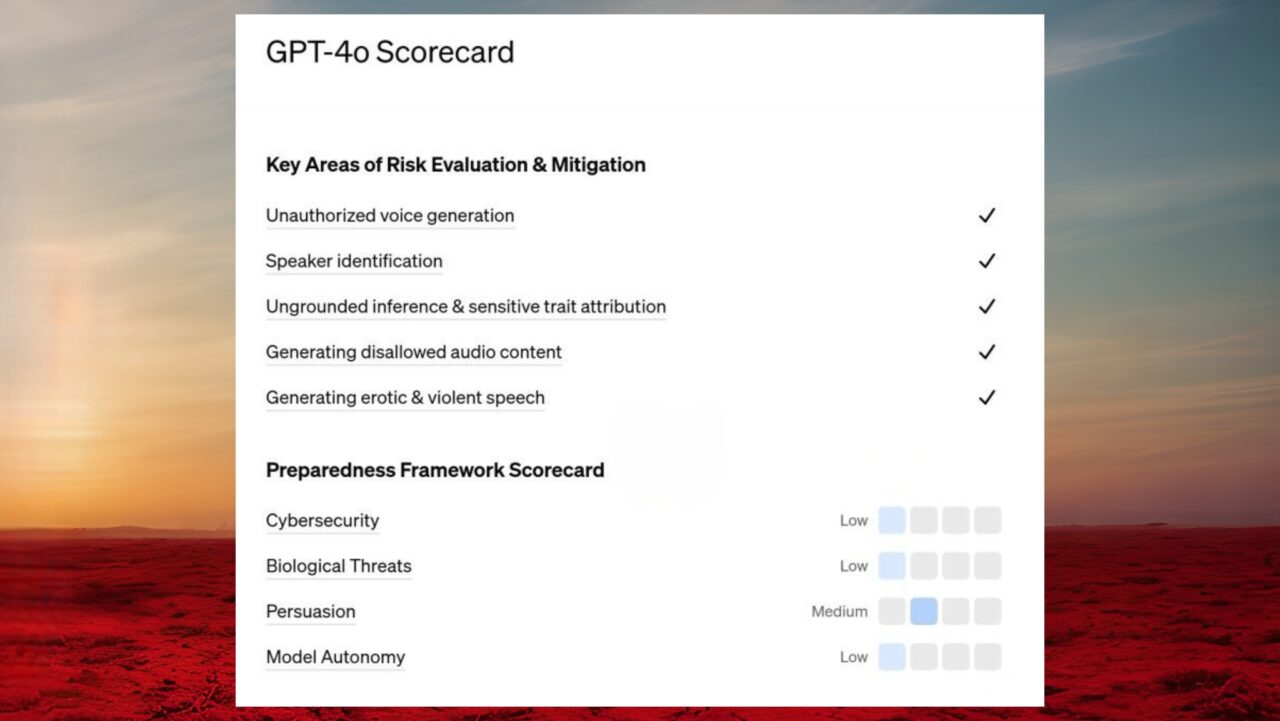

OpenAI uses its “Preparedness Framework” as the foundation for evaluating the GPT-4o model and analyzing the potential threats it poses. The framework focuses on identifying risks in four areas: cybersecurity, biological threats, persuasion (including the spread of misinformation), and model autonomy.

To prevent potentially harmful outputs from the model, OpenAI has implemented several security layers guided by this framework. The safety assessments of GPT-4o place particular emphasis on the model’s audio capabilities, namely speech recognition and speech generation.

The assessments identified risks such as speaker identification, unauthorized voice generation, production of copyrighted content, and misleading audio content. To counter these risks, strict security measures govern how the model can be used. Specifically, system-level controls have been added to prevent the model from generating certain types of content or engaging in misleading behavior.

OpenAI conducted rigorous security assessments before making the GPT-4o model available to the public. During this process, over 100 external experts tested the model to explore its capabilities, identify new potential risks, and evaluate the adequacy of existing security measures. Based on their feedback, the model’s security layers were further strengthened.

The security measures developed for the GPT-4o model by OpenAI aim to make AI usage safer. The model’s capabilities and security structure will be continuously evaluated and improved before being made available to a wider audience.

With these measures, OpenAI’s efforts to maximize the safety of AI technologies seem poised to set a standard in the field. What do you think about these security measures? Are these precautions sufficient for the safety of AI models? Share your thoughts in the comments below.