OpenAI is changing how its chatbot works for younger audiences. CEO Sam Altman announced that ChatGPT users under 18 will face new restrictions designed to reduce harmful interactions and give parents greater oversight.

ChatGPT users under 18 face stricter content limits

One of the biggest changes is a ban on flirtatious talk with minors. ChatGPT will also apply stronger guardrails to discussions of self-harm and will refuse roleplay involving suicide or other dangerous scenarios. In severe cases, the system may alert a linked parent account or even notify local authorities if the risk seems imminent.

These changes come after lawsuits accused OpenAI and rival Character.AI of enabling harmful conversations that led to tragic outcomes.

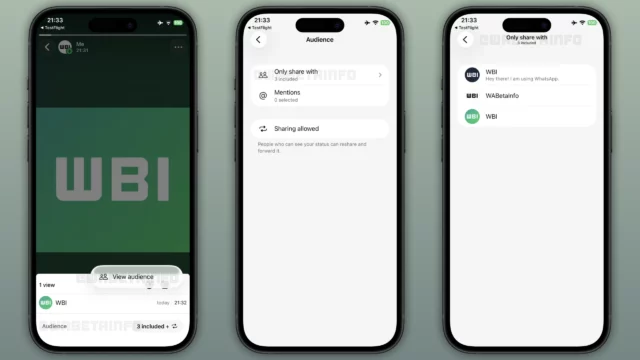

Parental tools for ChatGPT teen accounts

Parents will now be able to set blackout hours, blocking access at certain times of day. They can also link a teen account to their own, allowing them to receive alerts if ChatGPT detects signs of distress.

Key features for teen accounts include:

- Account linking for direct oversight

- Alerts when troubling conversations occur

- Under-18 restrictions applied by default when a user’s age is unclear

US Senate hearing adds pressure on chatbot safety

The rollout coincides with a Senate Judiciary Committee hearing on chatbot risks. Lawmakers are focusing on the death of Adam Raine, a teenager whose parents say ChatGPT interactions contributed to his suicide. A separate Reuters report uncovered internal Meta policy documents that appeared to allow its chatbots to engage in sexual chat with minors, sparking broader concern across the industry.

Balancing privacy with safety for ChatGPT users under 18

Altman acknowledged that the company faces tension between user privacy and teen protection. Adult users will continue to have wide freedom in how they interact with the model, but for ChatGPT users under 18, safety will take priority.

By imposing stricter rules, OpenAI is sending a clear message: the company would rather limit freedom than risk harm to vulnerable teens.