Google DeepMind has announced two new AI models designed to help robots adapt to unfamiliar situations and perform physical tasks they were not specifically trained for. Gemini Robotics and Gemini Robotics-ER aim to let robots interact more naturally with their environment and carry out tasks that require fine motor skills.

Google DeepMind introduces robotic AI models

Gemini Robotics is built on Gemini 2.0, Google's flagship AI model. According to Carolina Parada, head of robotics at Google DeepMind, the model combines Gemini's multimodal understanding of the world with physical action, making robots more flexible.

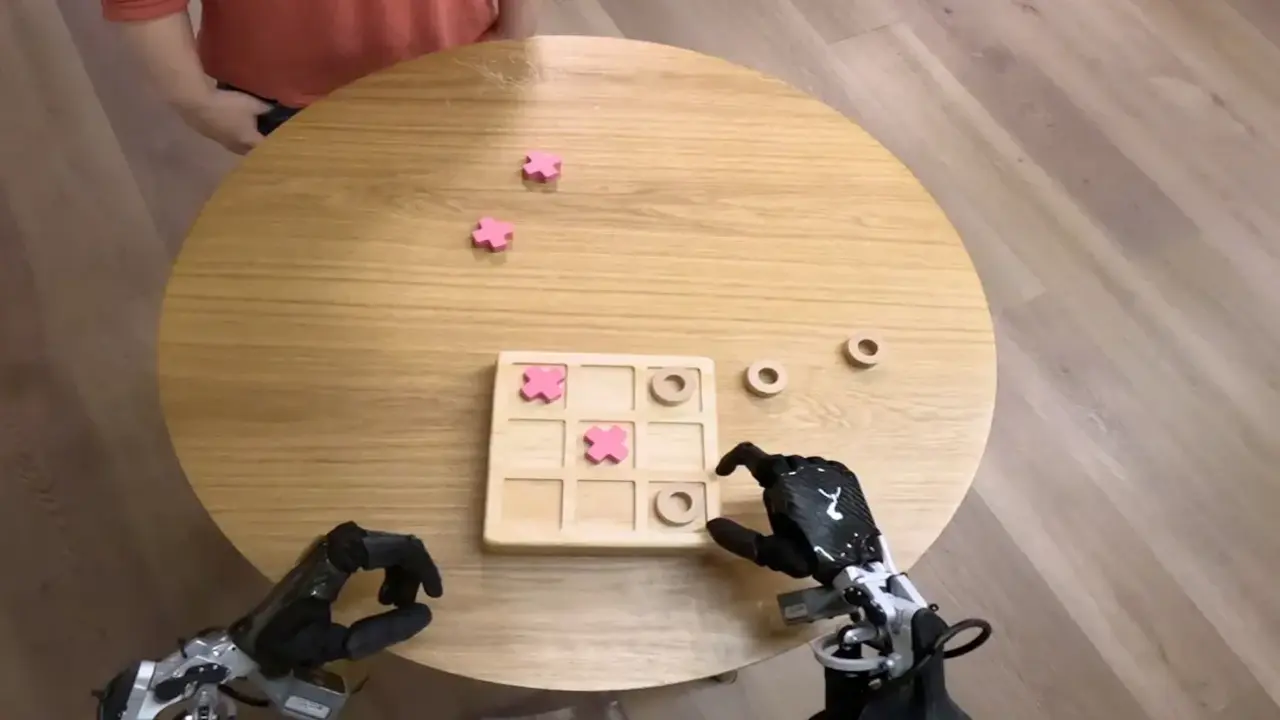

The model's most notable qualities are generality, interactivity, and dexterity. Robots using it can adapt to new situations, respond to people and to changes in their surroundings, and grasp and manipulate objects with greater precision, even in tasks they were not specifically trained on.

Gemini Robotics-ER ("ER" stands for embodied reasoning) focuses on spatial understanding: it helps robots analyze their surroundings and reason about how to act in them. This allows robots to plan and carry out tasks more independently.

For example, to pack a lunch bag, a robot has to locate each item, pick the items up in the right order, place them in the bag so they can be carried safely, and then close the bag. Gemini Robotics-ER is designed to reason through multi-step tasks like this.

Google DeepMind says the model can be integrated with existing robot control systems, opening new possibilities for researchers who want to extend what their robots can do. The company says it is also developing AI safety measures to help ensure that these robots operate safely.

These models are intended to let robots act more independently in the physical world and work more smoothly alongside people. What do you think? Share your opinions with us in the comments below.